Facebook enables automated scams, but fails to automate the fight against them

Scammers make heavy use of Facebook’s advertising platform, relying on so-called “cloakers” to evade automated checks. Cloakers would be very simple to detect but, despite announcements to the contrary, Facebook seems to tolerate them.

Facebook’s targeted advertising revolutionized confidence tricks, or scams, by which a criminal convinces his (the criminals are almost exclusively male) victims to give him money in exchange for future rewards, which never materialize. Scamming is not new. Virtually all email users have at some point received a “Nigerian prince” message offering them access to a hidden treasure – in exchange for a small advance payment.

Whereas finding victims used to be a hit-or-miss affair, Facebook does it automatically. Its advertising platform allows scammers to target potential victims while its machine learning algorithms automatically identify the users most likely to click on the scam, as Bloomberg Businessweek reported in 2018. A recent BuzzFeed investigation showed that one company advertising a typical scam paid Facebook $44,000 and made $79,000 in return from the scam's victims.

Cloaked ads

Because Facebook’s policies prohibit ads that make misleading claims, scammers use a technique known as “cloaking,” which lets them control what content each visitor sees after clicking on a link. Criminals can make sure that the Facebook robots in charge of automatically vetting each ad are served a piece of harmless content while potential victims see the page containing the scam.

In August 2017, Facebook announced that it would address cloaking. They wrote that they would use “artificial intelligence and expand [their] human review processes to identify, capture, and verify cloaking.”

Since then, however, scammers seem to have been able to continue advertising on the platform using cloaked ads. We could confirm at least six cases of cloaked ads since August 2019, which were seen by thousands of Facebook users in Germany. At least two victims mentioned that they had been defrauded as a result.

While several media outlets reported on the scams, which often use false celebrity endorsement and fake the design of reputable news organizations, the technicalities of cloaking are rarely, if ever, covered in the press.

Pablo Bablobar vs. Facebook

There are legitimate uses for cloaking. A website owner might want to keep robots from accessing content to prevent illegitimate data acquisition, for instance. However, most of the cloaking solutions we reviewed made little effort to hide the nature of their clients' activities. One such service, cloaki.ng, has a legitimate-looking homepage but drops all pretense of respectability in the footer, which reads “does our landing page made it's work well? Cool, welcome on board, bro!” The operator of the service goes by the name of “Pablo Bablobar”.

The cheapest cloaking solutions start at $300 (€275) per month, thus hinting at the amounts required to run a cloaking operation profitably. We could not find any cloaker willing to let us try their solution for journalistic purposes.

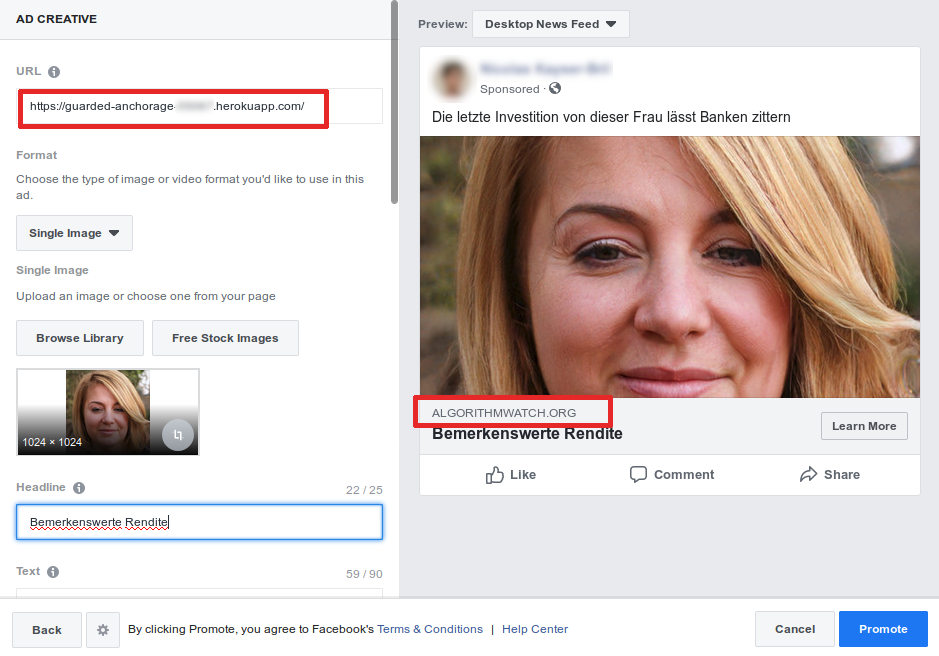

To test the effectiveness of Facebook’s efforts against cloakers, we built one ourselves. In just 16 lines of code and using a list of IP blocks freely available online, our cloaker sends all visitors with German IP addresses to a scam, while all others are sent to the homepage of AlgorithmWatch through an HTTP 302 redirect, a standard way to send users from one page to another.
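The routing decision described above fits in a few lines. The following is only a sketch of the general idea, not the code AlgorithmWatch wrote: the function name `choose_response`, the label `"targeted-page"`, the exact redirect URL and the two IP ranges (reserved documentation blocks standing in for a real per-country list) are all assumptions made for illustration.

```python
import ipaddress
from typing import Tuple

# Hypothetical placeholder ranges (reserved documentation blocks). A real
# setup would load a published list of IP blocks for the targeted country.
TARGET_COUNTRY_BLOCKS = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/24"),
]

def choose_response(visitor_ip: str) -> Tuple[int, str]:
    """Return (HTTP status, destination) for a visitor's IP address."""
    addr = ipaddress.ip_address(visitor_ip)
    if any(addr in block for block in TARGET_COUNTRY_BLOCKS):
        # Visitor appears to come from the targeted country:
        # serve the page directly.
        return 200, "targeted-page"
    # Everyone else, including crawlers located abroad, gets a
    # temporary redirect to a harmless page.
    return 302, "https://algorithmwatch.org/"
```

Because the decision depends only on the visitor's IP address, a reviewer who connects from outside the listed blocks never sees the targeted page.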

Approved in 90 minutes

We advertised the link to the cloaker on Facebook using a stock photo and the headline “This woman's latest investment makes banks tremble.” The ad, which was in German, was targeted at people within Germany. Despite this obvious cue, Facebook’s crawlers automatically checked the ad from IP addresses outside of Germany and processed the ad as if it were a link to the homepage of AlgorithmWatch. After slightly more than 90 minutes of review, the ad was approved and running. (We suspended the campaign immediately after approval.)

AlgorithmWatch talked to several security experts about cloaking. While they all agreed that criminals might use sophisticated methods to make cloaking hard to detect, they could see no reason why Facebook would not verify ads using IP addresses from the country being targeted by an advertiser.
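The check the experts describe could look roughly like the sketch below. It is an illustration under stated assumptions, not a description of any system Facebook runs: `find_cloaking_signal` and the `fetch_final_url` callable are hypothetical names, and the callable stands in for a crawler able to request a link from an IP address located in a given country.

```python
from typing import Callable, Dict, List

def find_cloaking_signal(
    ad_url: str,
    vantage_countries: List[str],
    fetch_final_url: Callable[[str, str], str],
) -> Dict[str, str]:
    """Compare where an ad link leads when requested from several countries.

    fetch_final_url(ad_url, country) is a hypothetical helper that must
    return the final destination seen from an IP address in that country.
    Returns the per-country destinations if they differ, else an empty dict.
    """
    observed = {c: fetch_final_url(ad_url, c) for c in vantage_countries}
    if len(set(observed.values())) > 1:
        return observed  # destinations diverge: flag for human review
    return {}

# Usage with a fake fetcher that imitates a geo-based cloaker.
def fake_fetch(url: str, country: str) -> str:
    return "scam-page" if country == "DE" else "https://algorithmwatch.org/"

signal = find_cloaking_signal("https://example.org/ad", ["DE", "US"], fake_fetch)
```

A difference between destinations is not proof of fraud, since legitimate sites also localize content by country. It is a cue that the ad deserves a closer look, ideally from an address in the country the advertiser chose to target.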

Some of the cloaked ads that AlgorithmWatch reviewed were taken down within 48 hours. Others, however, remained online for weeks, even after users wrote in the comments section that the ad was a fraud.

AlgorithmWatch sent a detailed list of questions to Facebook, which the company declined to answer. A spokesperson simply wrote in a statement that Facebook had “measures” in place against cloaking. He did not specify which ones.

The automation of scamming has probably claimed tens of thousands of victims in the European Union. Automating its prevention by clamping down on advertisers, at least those who use cloaking, would be technically feasible. Regulators should ask Facebook why it is taking so long to enforce its own advertising policies.
