The Insta-mafia: How crooks mass-report users for profit

Groups of teenagers use weaknesses in the notification systems of Facebook and Instagram to take over accounts and harass users. The upcoming DSA regulation addresses the issue but is likely to fall short.


Emma* is a Dutch teenager from a small town on the North Sea shore. Over the past two years, she built an Instagram presence that brought her, as of November 2020, over 20,000 followers. Then, around 18 November, it was gone.

We contacted Emma on Instagram to learn about her experience dealing with abuse on the platform. Like many content creators, especially women, she is regularly reported to Facebook, which owns Instagram. Once, she told us, her account was disabled after someone told Facebook she was impersonating someone else. A “stupid” proposition given the number of selfies she posts, she said. Emma eventually managed to get her account back after she talked to a representative from Facebook. In total, she was reported “five or six times” in two years and always managed to get her account back. But not this time.

Small-time crooks

Two days after we talked to her, Emma’s account was gone (as of 22 January 2021, it is still unavailable). We knew she was in danger because her Instagram username appeared in a Telegram group we were monitoring. Thousands of kilometers away from her home, she had been designated a target by a group of small-time criminals based in the Middle East.

These few dozen teenage men, who, judging from the Arabic they use, live mostly in Iraq and Saudi Arabia, take pride in booting Instagram users off the platform. They advertise their successes in the Stories of their own Instagram accounts, sometimes posting several such feats per day.

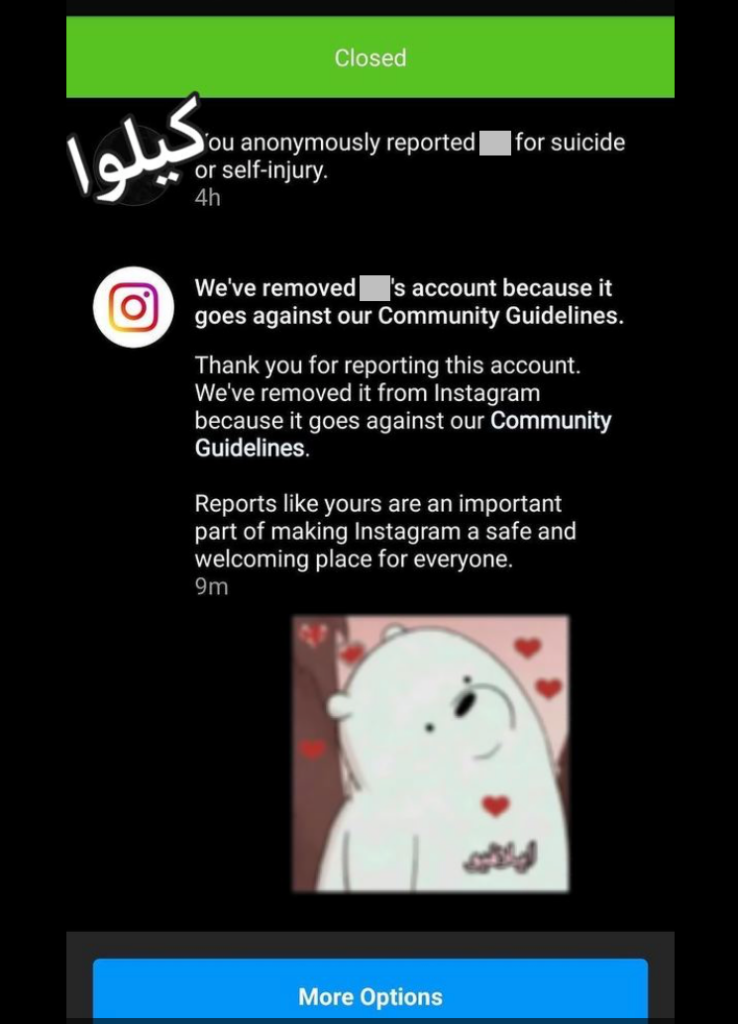

Their method is simple. They use basic scripts, which are available on GitHub, a platform to share computer code, to automatically report Instagram users. Using several Instagram accounts, a single criminal can report a user hundreds of times in a few clicks. Most of the time, the criminals report users for spam, impersonation, or for “suicide or self-injury”, a possibility introduced by Instagram in 2016. After receiving several such reports, Facebook suspends the targeted account.

A 16-year-old from Iraq who goes by the name “Zen” and takes part in the scheme told us that, once an account is suspended, he contacts Facebook by email and claims that he is the account’s rightful owner. Facebook asks him, in a process that seems automated, to send a picture of himself holding a piece of paper with a handwritten 5-digit code. Upon receipt of the photo, Facebook gives him ownership of the suspended account, which is reactivated. Once he is in control, he resells the account for between 20 and 50 US dollars, depending on the number of followers. Zen says buyers simply want more followers, but it is likely that the accounts are resold. Accounts with a few thousand followers can fetch up to 200 US dollars on platforms like SocialTradia.

Facebook declined to answer our precise questions on the record, and sent us this statement instead: “We don’t allow people to abuse our reporting systems as a way of harassing others, and have invested significantly in technology to detect accounts that engage in coordinated or automated reporting. While there will always be people who try to abuse our systems, we’re focused on staying one step ahead and disrupting this activity as much as we can.”

Chrome extension for mass report

We reviewed the tools used by the criminals. Using them requires no specific skills, and they can usually be run from a smartphone. Wrongfully and automatically reporting users on Facebook and Instagram is so easy that it is commonly used by small-scale criminals around the world.

Another group, operating from Pakistan, built a Chrome extension that allows for mass-reporting users on Facebook. A license for the tool costs 10 US dollars. The unlicensed version gives access to a list of targets, which is updated about twice a month. In total, we could identify about 400 unique targets in forty countries.

It is unclear how the Pakistani group selects its targets. They include mostly practitioners of Ahmadiyya Islam (a religious movement that started in Punjab), Baloch and Pashtun separatists, as well as LGBTQ+ advocates, atheists, journalists and feminists, in Pakistan and beyond. The criminals mostly report them under the headings “fake account” or “religious hatred”.

Mahmud*, an avowed atheist living in Belfast, appeared on one of their target lists. Because Facebook provides no information to people who are reported, he does not know whether the group actually attacked him. But he has been mass-reported before and says that “hundreds” of his posts have been blocked over the last seven years.

Thousands of victims

Mass-reporting is pervasive. Judging from the dozens of code snippets available on GitHub and the thousands of video tutorials on YouTube, it is likely that many more groups operate like the two criminal operations we monitored.

We surveyed a non-representative sample of 75 Facebook users in Great Britain. Twenty-one of them told us they had been blocked or suspended by the platform at least once. A 56-year-old from Scotland ran a group called “Let Kashmir Decide”. She told us that Facebook disabled it in September 2020, arguing that it “violated community standards”, likely as a result of mass reporting.

People who were repeatedly harassed and mass-reported on Facebook can feel that keeping a presence on the social network costs too much energy. A 30-year-old living in Berlin told us that she gave up on social media after repeated abuse, including being wrongfully reported. She said that this impacts her ability to take part in civic life because much of the public sphere has moved to social media. But the impact could be larger. As more social life moves to Facebook in the wake of the Covid pandemic, not being on Facebook can become a serious handicap. Some hospitals in Germany, for instance, have moved their public meetings to Instagram Live, making it impossible for non-members to join.

The effects of mass-reports can be invisible but still potent. According to some users, being reported can lead to a shadow-ban (meaning that one’s posts are much less likely to appear in the newsfeeds of other users or in the search results). In one experiment, an Instagram user found that her posts did not appear in the “explore” tab of the service after she was reported. A professional in online marketing told AlgorithmWatch that a Facebook representative confirmed that accounts that are often reported can, indeed, be shadow-banned.

Although mass-reporting likely affects thousands of Europeans, it remains less widespread than other forms of harassment such as insults.

The DSA to the rescue

On 15 December 2020, the European Commission proposed new rules to address the flaws of the “report this user” feature, as part of the Digital Services Act. The Act still needs to go through the European Parliament and the Council of the European Union before it becomes law.

The European Commission’s proposal would force very large platforms, such as Facebook and Instagram, to be more transparent towards users when a piece of content is reported and removed. In particular, platforms will have to build complaint-handling systems where reported users can contest the decision.

Facebook already has such a system in place. However, every person we spoke to who fell victim to mass-reporting groups told us that recourse mechanisms were illusory. Emma, the Dutch teenager who recently lost her Instagram account, said she previously had to switch her Instagram to a “business” account to be able to contact someone at Facebook. Her contact turned out to be from Facebook France, although she is from the Netherlands.

Trista Hendren, who lives in Norway, reports similarly arbitrary procedures. As a publisher of feminist books, she has been targeted repeatedly over the last eight years, most recently by the Pakistani group we monitored. She said that official procedures at Facebook never led to any action and that personal contact with Facebook employees was the only way to get an account or a post reinstated.

Free speech for the rich

The Digital Services Act of the European Commission proposes to create additional structures to resolve these issues. It foresees the creation of out-of-court settlement bodies to take over disputes that internal mechanisms were unable to resolve.

Josephine Ballon is a lawyer working for HateAid, a non-profit that helps victims of hate speech online. She told AlgorithmWatch that while the independent settlement bodies are a good thing, the DSA says nothing about where they would be located. Victims might have to start legal proceedings outside of their country, where the legislation could be different. In general, she says that the DSA does not really improve the ordeal victims go through.

Another issue with the settlement bodies is money. Users would have to pay to use these private arbitration courts (they would be reimbursed if the arbiter rules in their favor). With such a system, “fighting for one’s right to free speech [could become] a privilege for those who can afford it”, says Tiemo Wölken, a member of the European Parliament who was the rapporteur on the DSA for the Committee on Legal Affairs.

The DSA introduces an obligation to prevent abuse from people who report users in bad faith, such as the criminal groups we monitored. However, there is little chance that this mechanism will change much about the current situation, given that any report that is self-certified as being made in good faith is considered to be valid.

For Mr Wölken, while the DSA introduces much-needed safeguards, it gives platforms little incentive to stop their practice of “removing first and asking questions later”.

* The names were changed to protect the privacy of the people we talked to.

The production of this investigation was supported by a grant from the IJ4EU fund. The International Press Institute (IPI), the European Journalism Centre (EJC) and any other partners in the IJ4EU fund are not responsible for the content published and any use made out of it.
