A guide to the EU’s new rules for researcher access to platform data

Thanks to the Digital Services Act (DSA), public interest researchers in the EU have a new legal framework to access and study internal data held by major tech platforms. What does this framework look like, and how can it be put into practice?

For years, major social media platforms like Instagram, TikTok, and YouTube have offered only limited transparency into how they design and manage their algorithmic systems—systems with unchecked power to influence what we see and how we interact with one another online. Platforms’ efforts to identify and combat risks like disinformation have likewise been practically impossible for third parties to scrutinize without reliable access to the data they need.

The Digital Services Act (DSA) promises to change this status quo, in part through a new transparency regime (Article 40) that will enable vetted public interest researchers to access the data of very large online platforms, meaning those with at least 45 million average monthly active users in the EU. Now that the law has been published in the EU’s Official Journal, we can visualize the blueprint for the DSA’s data access rules, which are essential to holding platforms accountable for the risks they pose to individuals and society.

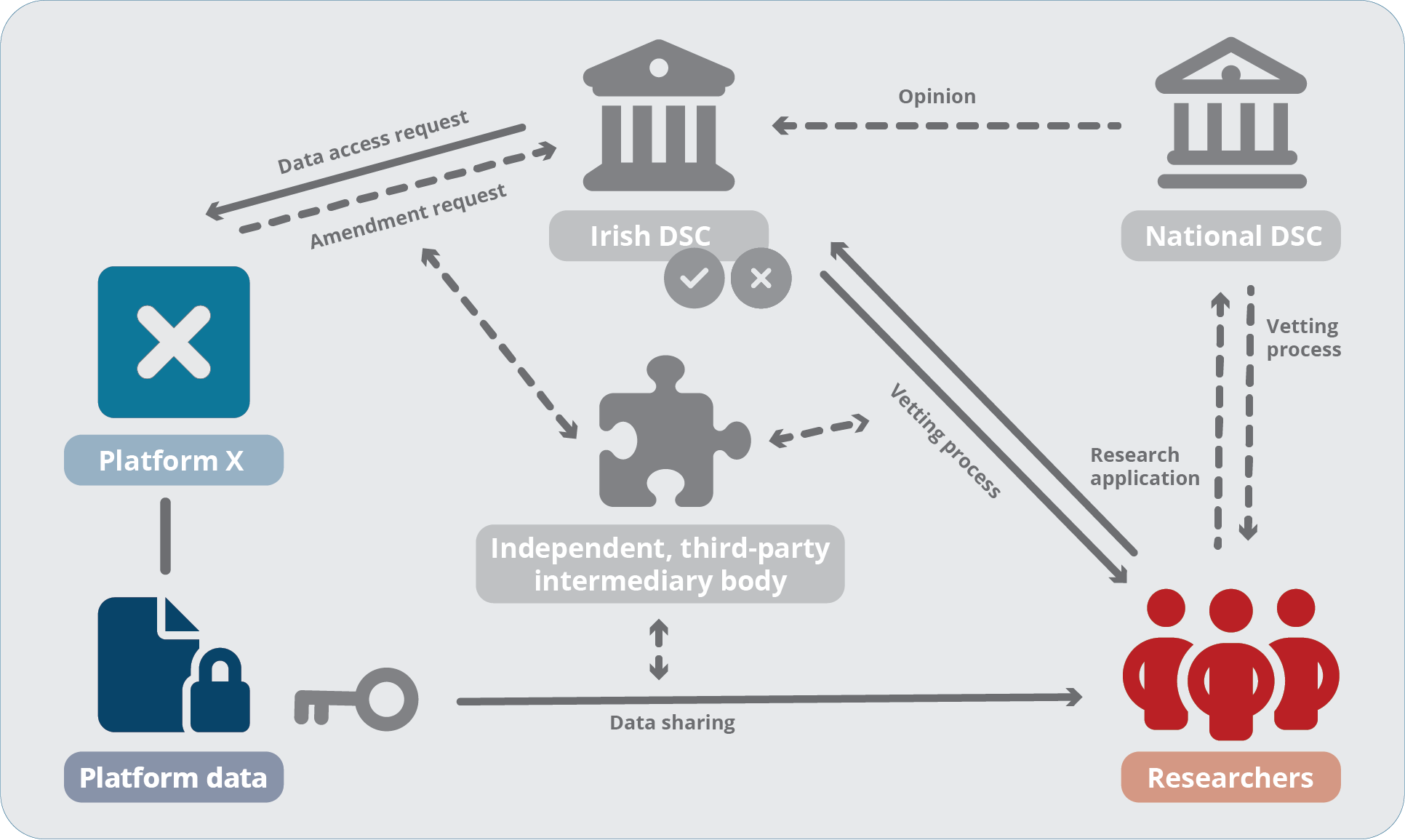

In this visual guide, we’ll walk through the data access regime envisioned by the DSA by following a hypothetical research team in their quest to access internal data held by “Platform X”. Along the way, we point out important elements that still need to be fleshed out before the promise of this new data access regime can be realized in practice.

How do researchers get access to platform data?

Application process

First, let's imagine a team of public interest researchers. These researchers are concerned about the influence that, let’s say, the very large online “Platform X” may be having on political polarization. It has been suggested that Platform X exacerbates political polarization — a process which poses risks to democracy and fundamental rights by pushing people toward more extreme political views (indeed, there is a vast scholarship exploring the link between social media and political dysfunction). Our researchers want to study whether the design of Platform X’s recommender algorithm encourages users to interact with more polarizing political content, and to assess whether the platform has put adequate (and legal) safeguards in place to stop pushing this kind of content to vulnerable users.

Now that our researchers have a question tied to a particular systemic risk (in this case, risks to democracy stemming from political polarization), they need access to Platform X’s internal data to test their hypothesis. Specifically, they want to analyze the algorithms and training data used to build the model behind Platform X’s recommender system.

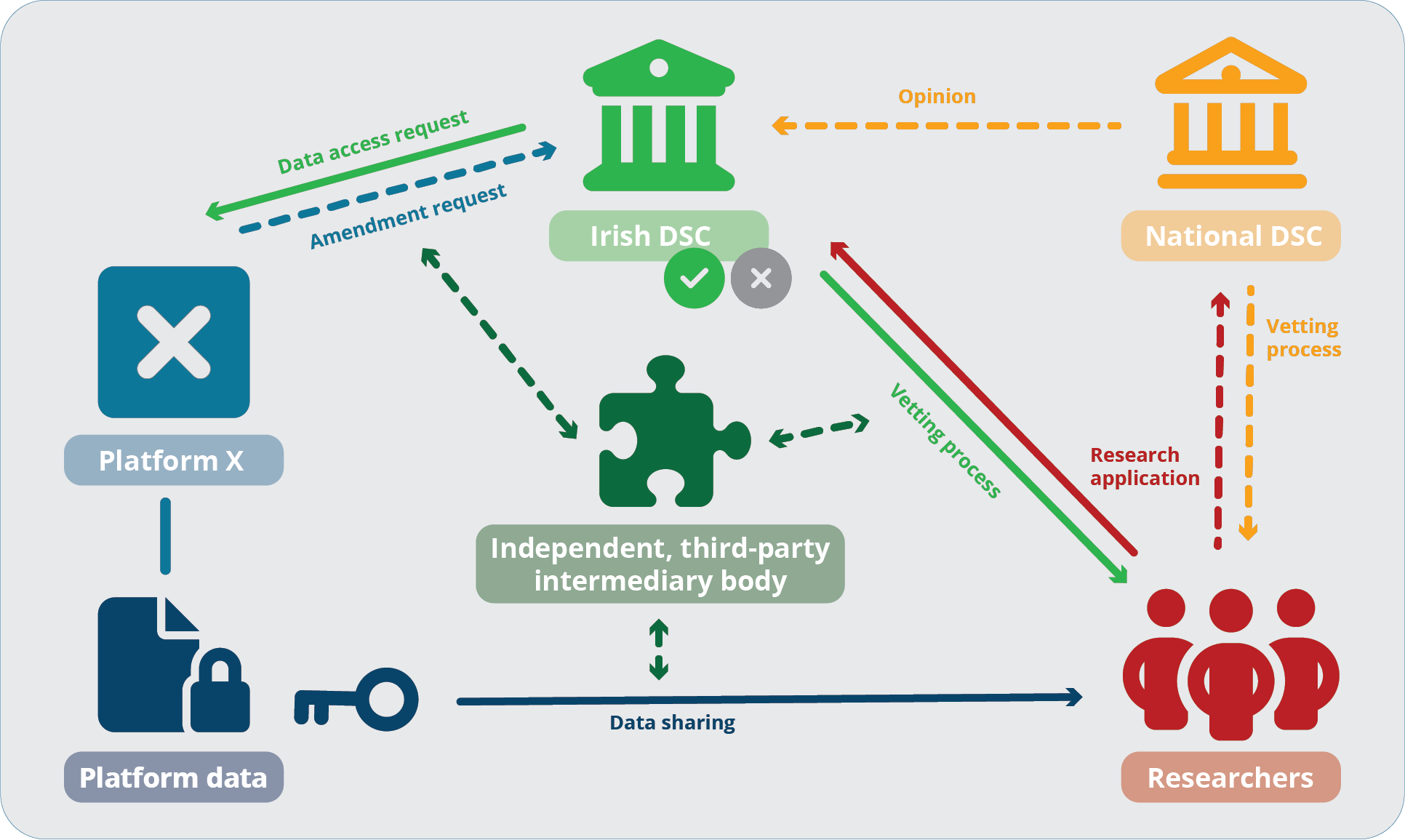

So we have our scenario: a researcher (or research team) wants to study a particular systemic risk and needs access to Platform X’s data to understand it. According to Article 40 of the DSA, the first step toward gaining such access is to prepare a detailed research application that lays out, among other things, why the researchers need the data, their methodology, and how they plan to protect data security, and that commits them to making their research results publicly available free of charge.

The researchers also need to demonstrate that they are affiliated with a university, a non-academic research institute, or a civil society organization that conducts “scientific research with the primary goal of supporting their public interest mission.” This might extend to consortia of researchers, including journalists and researchers based outside the EU, as long as the main applicant is a European researcher who meets the criteria above. So let’s say that the main applicant on our research team is a post-doctoral researcher at a university in Spain.
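To make the application elements above concrete, here is a minimal Python sketch that models a few of them as a checklist. The class `ResearchApplication`, the function `missing_elements`, and the affiliation labels are hypothetical names introduced for illustration. The fields cover only the items this guide mentions (Article 40 lists more), and passing this toy checklist says nothing about whether a regulator would actually approve an application.

```python
from dataclasses import dataclass

# Hypothetical labels for the affiliation types named in the text above.
ELIGIBLE_AFFILIATIONS = {"university", "research_institute", "civil_society_organisation"}


@dataclass
class ResearchApplication:
    """Hypothetical model of a few elements an Article 40 application describes."""
    purpose: str                       # why the researchers need the data
    methodology: str
    data_security_concept: str         # how the data will be protected
    commits_to_free_publication: bool  # results public and free of charge
    main_applicant_affiliation: str
    main_applicant_in_eu: bool


def missing_elements(app: ResearchApplication) -> list[str]:
    """Return the items from this illustrative checklist that are absent.

    An empty list only means the toy checklist is satisfied; real vetting
    is a legal assessment by the regulator, not a script.
    """
    missing = []
    if not app.purpose.strip():
        missing.append("purpose")
    if not app.methodology.strip():
        missing.append("methodology")
    if not app.data_security_concept.strip():
        missing.append("data_security_concept")
    if not app.commits_to_free_publication:
        missing.append("commitment to free public results")
    if app.main_applicant_affiliation not in ELIGIBLE_AFFILIATIONS:
        missing.append("eligible affiliation")
    if not app.main_applicant_in_eu:
        missing.append("EU-based main applicant")
    return missing


# Our fictional team, before drafting its data security concept.
team = ResearchApplication(
    purpose="Test whether Platform X's recommender amplifies polarizing political content",
    methodology="Analysis of the recommender model and its training data",
    data_security_concept="",  # not yet drafted
    commits_to_free_publication=True,
    main_applicant_affiliation="university",  # post-doc at a Spanish university
    main_applicant_in_eu=True,
)
print(missing_elements(team))  # ['data_security_concept']
```

Modeling the application as a plain data record keeps the sketch readable; the point is only that each element the text lists is something the applicants must supply before filing.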

Vetting process

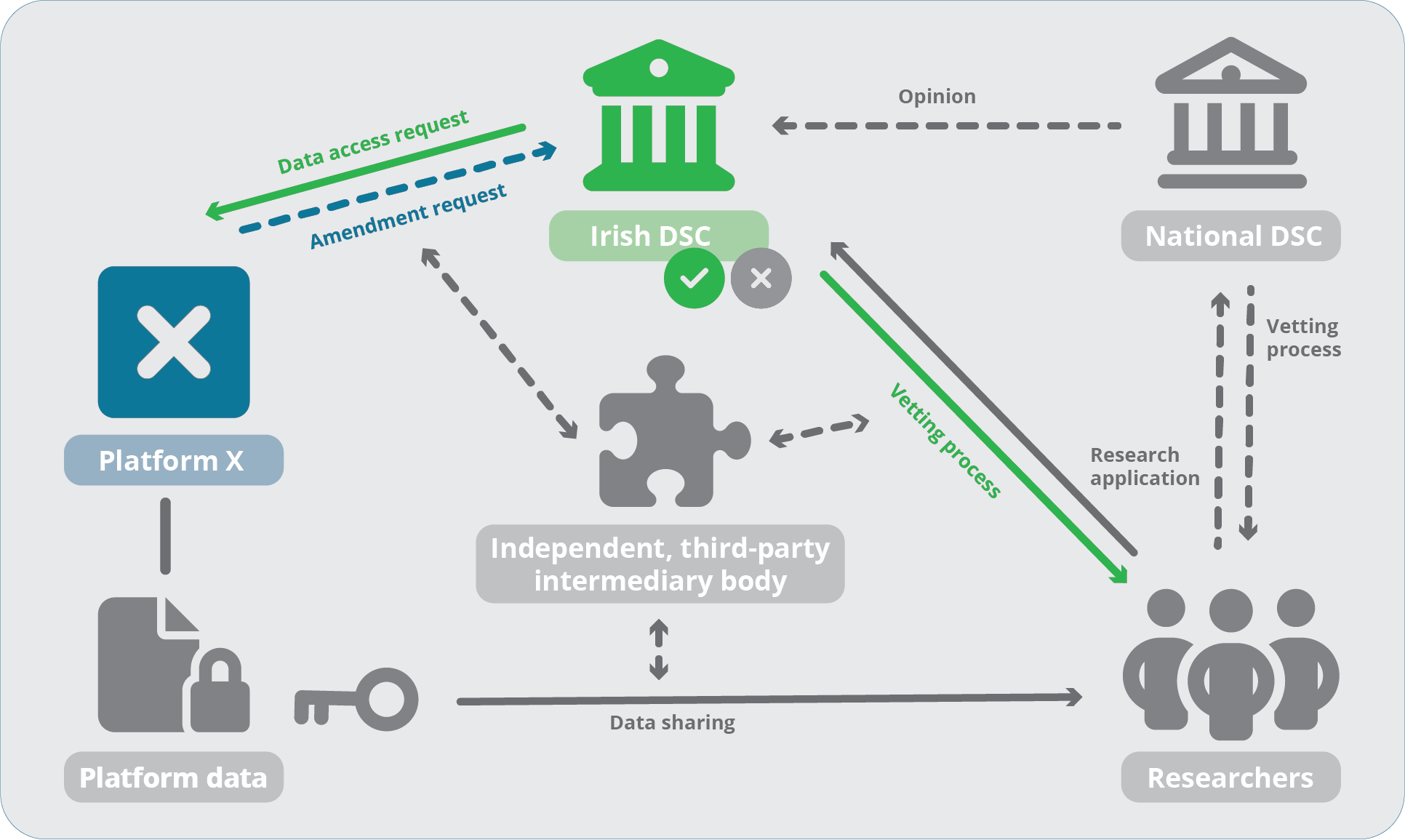

The next step is for the researchers to file their application with the responsible national regulator, the so-called Digital Services Coordinator (DSC) of Establishment, meaning the regulator in the member state where the platform has its main establishment. This DSC will ultimately approve or reject the researchers’ application following an extensive vetting process. The process is designed to ensure that researchers are legitimately working in the public interest, meet the necessary criteria, and have adequate technical and organizational safeguards in place to protect sensitive data once they gain access.

Given that most of the largest platforms and search engines are based in Ireland, the DSC of Establishment tasked with vetting researchers will in many cases be the Irish DSC. Let’s assume this is the case for Platform X.

Researchers may also send their applications to the Digital Services Coordinator in the country where they are based, which in our fictional case would be the Spanish DSC. This national DSC would then issue an opinion to the Irish DSC on whether to grant the data access request, but the Irish DSC will ultimately be the final decision-maker in vetting most research proposals.

To be clear, these Digital Services Coordinators don’t exist yet: EU member states will have to appoint them and make sure they’re up and running by the time the DSA becomes applicable across the EU in early 2024. The DSA’s drafters anticipated that vetting data access requests may prove to be a complex task for some national regulators, especially those with limited capacity, resources, or expertise in this domain. The stakes are also high, especially when it comes to vetting requests to access sensitive datasets to study complex research questions. That’s why Article 40(13) of the DSA provides a potential role for an “independent advisory mechanism” to help support the sharing of platform data with researchers.

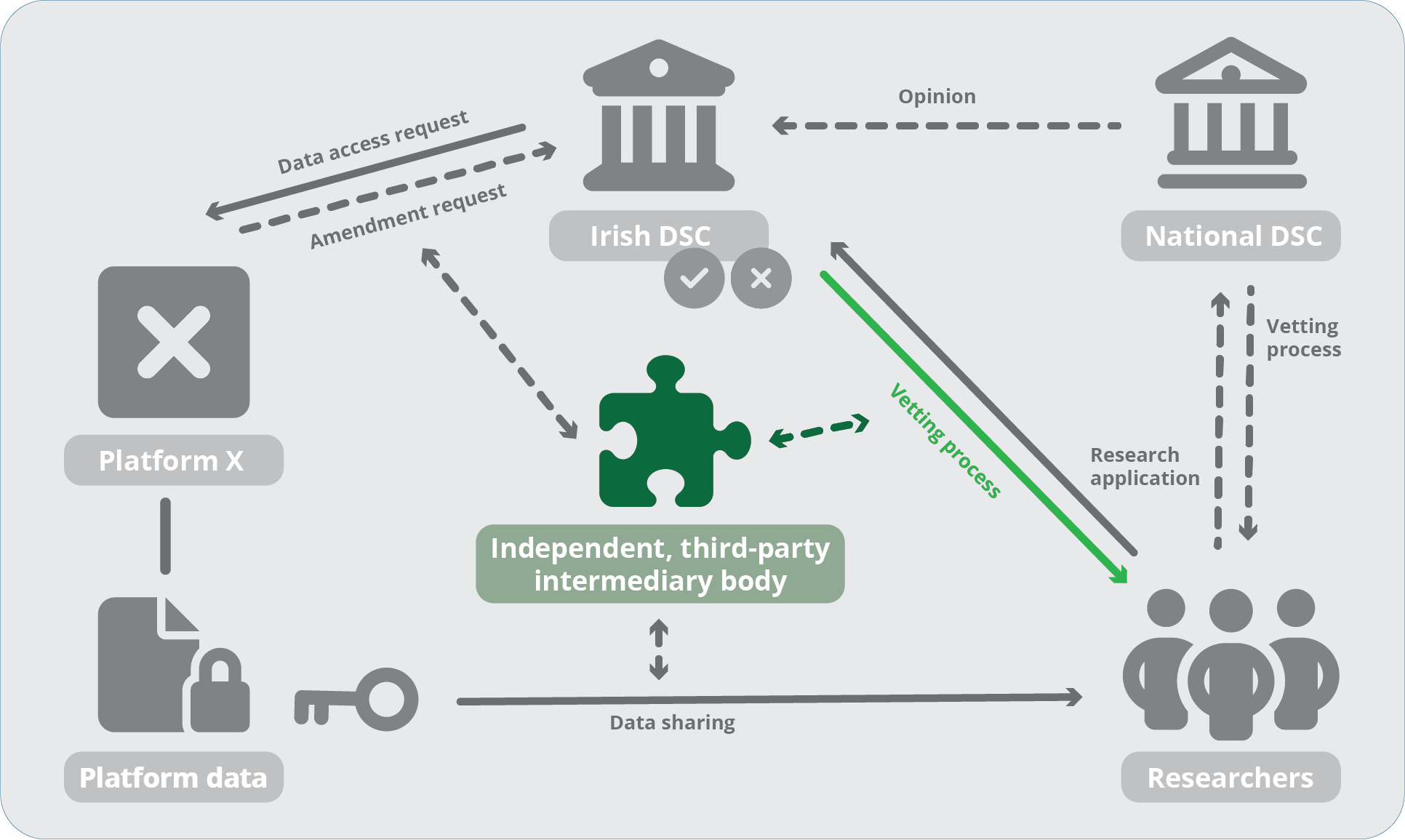

Independent advisory mechanism

The role of this advisory mechanism, as well as the technical conditions under which data may be safely shared and the purposes for which they may be used, still needs to be clarified by the Commission in forthcoming delegated acts. But it’s worth noting that the major platforms already committed to developing, funding, and cooperating with a similar advisory mechanism, an “independent, third-party intermediary body”, precisely for this purpose when they signed onto the EU’s revised Code of Practice on Disinformation in June 2022. The European Digital Media Observatory (EDMO) also recommends establishing such an intermediary in its report on researcher access to platform data, which details how platforms may share data with independent researchers in a way that protects users’ rights in compliance with the General Data Protection Regulation (GDPR). AlgorithmWatch published a similar recommendation in our Governing Platforms project, using examples from other industries to show how research access could be operationalized in platform governance. In our view, this intermediary body could even play a more central role, for example by maintaining access infrastructure or auditing disclosing parties.

So far, it’s unclear whether platforms are taking their commitments in the new Code of Practice on Disinformation seriously. We don’t yet know when to expect the independent intermediary body they promised in the Code, or whether this body will dovetail with the independent advisory mechanism referenced in the DSA. Still, given its potential role in the proposed governance structure for platform-to-researcher data access, such a body could be an important piece of the overall data access puzzle, provided it has a relevant and clear mandate, adequate resources, sufficient expertise, and genuine independence.

To recap: our research team has filed their application with the Irish Digital Services Coordinator. If the Irish DSC decides that the researchers and their application fulfill the necessary criteria, the DSC may award them the status of “vetted researchers” for the purpose of carrying out the specific research detailed in their application. If the researchers filed their application with the DSC of the country where they are based (in this case, the Spanish DSC), then the Irish DSC’s decision may be informed by the Spanish DSC’s assessment. The vetting process may also be aided by an independent, third-party intermediary body.

Let’s say now that the DSC (again, in our case and probably most cases the Irish DSC) has finally approved the research application. Hurrah! Now the hard part is over, right?

Official request

Not necessarily. Once the research application is approved, the DSC will submit an official data access request to Platform X on behalf of the vetted researchers. The platform then has 15 days to respond to the DSC’s request. If the platform can provide the data as requested, the process keeps moving right along. But if the platform says it can’t provide the data, either because it doesn’t have access to the requested data or because providing access would create a security risk or compromise “confidential information” such as trade secrets, then it can ask for the data access request to be amended. If the platform goes this route, it must propose alternative means of providing the data or suggest other data that can satisfy the purpose of the initial request. The DSC then has another 15 days to confirm or decline the platform’s amendment request.
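The timing in this step is simple date arithmetic. The Python sketch below computes the worst-case calendar for a hypothetical request: the platform uses its full 15 days to seek an amendment, and the DSC then uses its full 15 days to decide. It assumes plain calendar days added to the date of each triggering event and ignores the EU’s formal rules for computing time limits (for example, how weekends and public holidays are treated). The function names and the example date are hypothetical.

```python
from datetime import date, timedelta

# Assumption: both periods are plain calendar days; the formal EU rules on
# computing time limits (weekends, public holidays) are ignored here.
PLATFORM_RESPONSE_PERIOD = timedelta(days=15)
DSC_DECISION_PERIOD = timedelta(days=15)


def amendment_deadline(request_received: date) -> date:
    """Last day (in this sketch) for Platform X to seek an amendment."""
    return request_received + PLATFORM_RESPONSE_PERIOD


def dsc_decision_deadline(amendment_filed: date) -> date:
    """Last day (in this sketch) for the DSC to confirm or decline the amendment."""
    return amendment_filed + DSC_DECISION_PERIOD


def latest_decision_date(request_received: date) -> date:
    """Worst case: the platform files on its last day, the DSC decides on its last day."""
    return dsc_decision_deadline(amendment_deadline(request_received))


received = date(2024, 3, 1)  # hypothetical date the request reaches Platform X
print(amendment_deadline(received))    # 2024-03-16
print(latest_decision_date(received))  # 2024-03-31
```

In other words, under these simplifying assumptions an amendment request can push the start of data sharing back by roughly a month after the official request arrives.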

During the DSA negotiations, serious concerns were raised that this vague, so-called “trade secret exemption” would make it too easy for platforms to routinely deny data access requests. Legislators tried to address this in the DSA’s final text by clarifying that the law must be interpreted so that platforms cannot abuse the clause as an excuse to deny vetted researchers access to data. Just as with the vetting process, the challenge of assessing these amendment requests suggests that an independent body could play another key role here in advising the DSCs.

Data sharing

The process is nearly complete. Once Platform X has either complied with the initial data access request or had its amendment request approved, it must actually provide our researchers with access to the data specified in the research application. Now it’s up to our researchers to put their skills to work and show the public whether Platform X’s recommender algorithm is indeed pushing users to engage with more politically polarizing content, and to scrutinize whether the platform is taking the measures needed to mitigate that risk.

There are still many technical and procedural details that need to be worked out by the European Commission over the next year before the DSA’s data access regime can be fully implemented and enforced. EU member states still need to designate their digital services coordinators, and an independent, third-party intermediary that helps to facilitate data access requests has yet to materialize.

In the meantime, independent researchers at AlgorithmWatch and elsewhere will continue trying to understand the algorithmic decision-making systems behind online platforms and how they influence society, often using research methods that don’t rely on platforms’ internal data, such as data donation projects and other adversarial audits. Adversarial audits have shown, for example, that Instagram’s recommender system disproportionately pushed far-right political content, and that TikTok promoted war-related content to users in Russia that was supposedly banned. This kind of research will remain an important complement to the DSA’s regulated data access in exposing systemic risks, and it must be protected given platforms’ track record of hostility toward such external scrutiny.

The promise of the DSA’s new transparency and data access rules is that they will allow public interest researchers to dig even deeper and help us gain a more comprehensive understanding of how platforms’ algorithmic systems work. Only once we have that level of transparency and public scrutiny can we really hold platforms accountable for the risks they pose to individuals and to society.
